The impact of generative AI on IT infrastructure

"Generative AI and its possibilities" (4/5). Artificial intelligence has brought considerable changes to IT infrastructure, transforming the way we store, process and use data, and requiring the management of new artifacts: the models themselves and everything that revolves around them. In this article, we’ll explore the main changes that AI has introduced into the field of IT infrastructure.

The data storage revolution in the post-Big Data era

AI has created a massive need for data storage. Pre-trained AI models, such as the famous GPTs (Generative Pre-trained Transformers), require colossal amounts of data for their training. This has driven the adoption of cloud storage solutions, distributed databases and fast storage systems to meet this growing demand.

What impact has this had on Big Data architectures? It has changed the nature of the data to be stored and the way it is indexed. Indeed, as we explained in our previous article (Generative AI: be careful not to give it just anything!), the aim in most cases is to produce/predict the next element in a sequence of elements: word, pixel, sound, etc. To predict the next element, you need a data index, and you need to be able to mathematically compare one element with another.

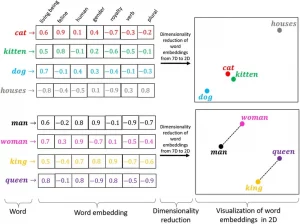

The mathematical synthesis of each element is a vector that integrates all the element’s descriptive statistics. For example, the vector of a word could be a list of the probabilities of occurrence of that word relative to all the others. Each word thus has its own probability vector in relation to all the others. By its very nature, a vector is neither a simple INT nor a CHAR.

This led to the need to store and index this new type of data specifically, which is how vector databases emerged. Their distinctive features are that they are highly flexible, implement vector-specific distance functions (cosine distance, Euclidean distance, etc.) and require slightly more CPU than conventional databases.
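To make the idea of comparing vectors concrete, here is a minimal sketch in Python of the two distance functions mentioned above, together with a brute-force nearest-neighbor search. The three-dimensional vectors, the `index` dictionary and the `nearest` helper are hypothetical and purely illustrative; a real vector database would typically rely on specialized index structures rather than a full scan.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: compares the direction of two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(query, index, distance):
    """Return the key whose stored vector is closest to the query.

    Brute-force scan over every entry (an assumption made for clarity).
    """
    return min(index, key=lambda key: distance(query, index[key]))


# Hypothetical toy index: each word is mapped to an invented 3-dimensional vector.
index = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.0],
    "car": [0.0, 0.1, 0.9],
}

query = [0.88, 0.12, 0.0]
closest_by_cosine = nearest(query, index, cosine_distance)
closest_by_euclidean = nearest(query, index, euclidean_distance)
```

With these invented values, both distances select the same neighbor, but that is not guaranteed in general: cosine distance ignores vector length, while Euclidean distance does not.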

Computing power and specialized processing units

One of the most notable evolutions is the exponential increase in the computing power required. AI algorithms, such as those used in deep learning, require considerable processing power to train and deploy high-performance models. To meet this demand, companies have invested in graphics processing units (GPUs) and tensor processing units (TPUs), the latter specifically designed to accelerate AI-related calculations. Companies producing this computing power have seen their sales double from 2022 to 2023 (117% for NVIDIA). New, even more specialized computing units, optimized for learning algorithms, are currently being tested. There’s no doubt that, given the sums involved and the speed of obsolescence, it’s preferable to turn to cloud AI computing rather than bearing the cost of all these hardware evolutions yourself.

Cloud computing has thus become a pillar of IT infrastructure in the context of AI. Cloud providers now offer AI-specific services, providing scalable computing resources for model training and deployment. This approach has enabled companies to cut costs and gain flexibility.

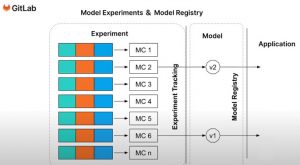

Beyond computing power, the management and indexing of new AI-related artifacts has led to the emergence of new services among already dominant market players. The case of GitLab is very interesting, as it is one of the leaders in versioning and CI/CD tools. GitLab had to evolve its indexing technology and operating principle so that each version can be associated with the notion of experiment, which is inherent in the exploration stage of a Data Science project. GitLab has also integrated a dedicated space, the model registry, for managing the models validated after these experiments, which facilitates and secures the entire process of putting these models into production: the MLOps process.
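The relationship between code versions, experiments and a model registry can be sketched with a small in-memory data structure. This is a conceptual illustration only and is not GitLab's API: the `Experiment` and `ModelRegistry` classes, the `promote` method, the commit identifiers and the metric values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Experiment:
    commit: str    # code version the run was produced from
    params: dict   # hyperparameters used for this run
    metric: float  # evaluation score (higher is better in this sketch)


@dataclass
class ModelRegistry:
    experiments: list = field(default_factory=list)
    validated: dict = field(default_factory=dict)

    def log(self, experiment):
        """Record an exploratory run alongside its code version."""
        self.experiments.append(experiment)

    def promote(self, name, threshold):
        """Register the best run under `name` if its metric reaches the threshold."""
        if not self.experiments:
            return None
        best = max(self.experiments, key=lambda e: e.metric)
        if best.metric < threshold:
            return None
        self.validated[name] = best
        return best


# Hypothetical usage: two runs, only the better one is validated.
registry = ModelRegistry()
registry.log(Experiment(commit="a1b2c3", params={"lr": 0.1}, metric=0.81))
registry.log(Experiment(commit="d4e5f6", params={"lr": 0.01}, metric=0.92))
promoted = registry.promote("demo-model", threshold=0.9)
```

Keeping the commit next to each run is what makes a validated model traceable back to the exact code that produced it, which is the point of tying versions to experiments.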

In short, artificial intelligence has brought radical changes to IT infrastructure. The need for computing power and data storage, the adoption of cloud computing and changes to the operating principles of major tools are all revolutions that have redefined the way companies manage their IT resources.

To remain competitive in this new technological ecosystem, organizations must continue to adapt and invest in scalable, flexible IT infrastructures, while fully exploiting the potential of AI to drive innovation and growth.

Yasser Antonio LEOTE CHERRADI – Practice Manager JEMS