Why do you need a maturity model before embarking on generative AI?

On Hugging Face, the platform for sharing open-source machine learning models, there are 48,000 Large Language Models (LLMs) and as many ways of using them. Should we choose the most popular one? Or the most downloaded? Consider this analogy: if you’re a farmer, do you use a Ferrari to plough your fields? If you’re a data architect, do you drive a tractor to work? Of course not. The same goes for generative AI: being able to identify your needs is crucial. To help you do so, we propose a maturity model.

LLM basics: Understanding before applying

It can’t be said often enough: there’s nothing magical about generative AI. LLMs are programs built through statistical training which, given a sentence, suggest the most likely next word(s) in that context. From a certain point of view, we’re at the level of bar-counter chatter (the French “café du commerce”), where someone repeats what they heard and memorized from the radio that very morning.
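To make “suggesting the most likely next word” concrete, here is a deliberately crude sketch: a bigram model that simply counts which word follows which in a tiny, made-up training text. Real LLMs use neural networks over tokens and far longer contexts, so this only illustrates the statistical principle; it is not how any LLM is actually implemented.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = corpus.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the statistically most likely next word, or None if the word was never seen."""
    counts = model.get(word.lower())  # .get() does not create entries in a defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy training text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat": it follows "the" twice, more than any other word
```

The model can only “repeat what it heard”: a word absent from the training text gets no prediction at all.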

Interestingly, an ability to “reason” has emerged in LLMs, significantly enhancing what they have learned, i.e. their “memory”. In itself, this is remarkable. However, it doesn’t necessarily provide the answers expected in the specific context of a project or company. We can therefore distinguish between different ways of interacting with LLMs, from the crudest use of their knowledge base to levels that make greater use of their reasoning skills.

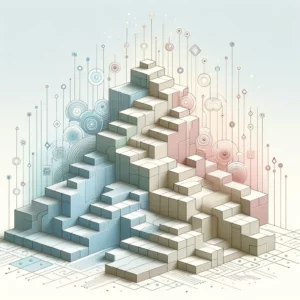

This corresponds to different levels of use and maturity.

Level 1: The art of prompt engineering

The first level is the basic, direct use of LLMs: the level of the generic question. This is the famous “prompt engineering”, the art of asking the machine the right questions. It is now well established that the quality of the answers depends on how the questions are phrased. More generally, the answer depends on the training corpus and on the LLM’s specialization, as with models specialized in code generation. The request can then be summarized as:

- “Answer this question based on what you’ve learned in training”.

This is obviously the simplest approach, but its results depend heavily on the organization behind the LLM and the training choices it made.
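As an illustration of why phrasing matters, the sketch below contrasts a vague question with a structured prompt that states a role, the context, the question and the expected answer format. The function name, field names and example wording are hypothetical; there is no single standard template, and the resulting string would simply be sent to whichever LLM you use.

```python
def build_prompt(question: str, role: str, context: str, output_format: str) -> str:
    """Assemble a structured prompt: who the model should act as, the relevant
    context, the question itself, and the expected form of the answer."""
    return (
        f"You are {role}.\n"
        f"Context: {context}\n"
        f"Question: {question}\n"
        f"Answer format: {output_format}"
    )


# A vague request leaves the model to guess what is expected.
vague = "Tell me about suppliers."

# The same need, expressed with explicit role, context and format (hypothetical example).
structured = build_prompt(
    question="Which criteria should we use to pre-select new suppliers?",
    role="a purchasing expert in an industrial company",
    context="We manufacture electronic components and must comply with CSR rules.",
    output_format="a bulleted list of at most five criteria, each with a one-line justification",
)
print(structured)
```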

Level 2: Beyond the basics with RAG

At the second level, the prompt is enriched with additional information. This is the famous RAG (Retrieval-Augmented Generation). In practice, this amounts to saying to the machine:

- “Answer this question based on what you’ve learned in training AND on the documents I give you”.

Many chatbots rely on this technique. Relevant documents are typically found using vector databases, which identify text extracts that are semantically close to the question.

This method requires the knowledge base to be vectorized beforehand, i.e. texts and questions are represented mathematically as vectors (embeddings). By measuring the distance between the question’s vector and those of the documents, it is possible to find the documents that provide the most relevant elements for the answer.
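The retrieval step can be sketched in a few lines of Python. To stay self-contained, this sketch assumes a toy “embedding” (a bag-of-words count vector) instead of a real neural embedding model, and an in-memory list instead of a vector database; the documents and helper names are hypothetical. The principle is the same: vectorize the texts and the question, rank documents by cosine similarity, and insert the closest extracts into the prompt.

```python
import math
from collections import Counter
from typing import List

# Hypothetical mini knowledge base; in practice, chunks of company documents.
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of travel.",
    "The VPN must be used when accessing internal tools from home.",
    "New suppliers are vetted by the purchasing department.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector.
    Real RAG systems use a neural embedding model instead."""
    words = [w.strip(".,?!").lower() for w in text.split()]
    return Counter(w for w in words if w)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, k: int = 1) -> List[str]:
    """Return the k documents whose vectors are closest to the question vector."""
    q = embed(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, k: int = 1) -> str:
    """Enrich the prompt with the retrieved extracts."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, k))
    return (
        "Answer the question based on what you learned in training "
        "AND on the following documents:\n"
        f"{context}\n\nQuestion: {question}"
    )


print(build_rag_prompt("When must expense reports be submitted?"))
```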

A variant of this enrichment is fine-tuning. Although very few organizations can muster resources comparable to those of Meta or OpenAI, low-cost techniques do exist. As with other neural networks, one approach is to freeze the weights of the lower layers and train only the upper (final) layers, often with reduced numerical precision. In a way, it’s like a trainee learning business-specific notions “on top” of academic knowledge.
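The layer-freezing idea can be illustrated with a toy two-layer model in plain Python. This is a hypothetical sketch, not how one would fine-tune an actual LLM (deep learning frameworks such as PyTorch typically do it by setting `requires_grad = False` on the frozen parameters): the “pre-trained” lower layer keeps its weights fixed, and gradient descent updates only the final linear layer.

```python
from typing import List, Sequence, Tuple

# Hypothetical "pre-trained" lower layer: maps 2 inputs to 2 hidden features. Frozen.
FROZEN_W1 = [[0.5, -0.2], [0.3, 0.8]]


def lower_layer(x: Sequence[float]) -> List[float]:
    """Frozen lower layer: a fixed linear map followed by ReLU (never updated)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W1]


def fine_tune_upper(
    data: Sequence[Tuple[Sequence[float], float]], epochs: int = 2000, lr: float = 0.1
) -> Tuple[List[float], float]:
    """Train only the upper (final) layer, a linear head on top of the frozen features,
    with stochastic gradient descent on the squared error."""
    w2 = [0.0, 0.0]
    b2 = 0.0
    for _ in range(epochs):
        for x, y in data:
            h = lower_layer(x)  # features from the frozen layer; no update here
            pred = sum(w * hi for w, hi in zip(w2, h)) + b2
            err = pred - y  # derivative of 0.5 * (pred - y) ** 2 w.r.t. pred
            w2 = [w - lr * err * hi for w, hi in zip(w2, h)]
            b2 -= lr * err
    return w2, b2


# Hypothetical "business" data whose targets the frozen features can express.
DATA = [((1.0, 0.0), 1.3), ((0.0, 1.0), 0.8), ((1.0, 1.0), 1.7), ((2.0, 1.0), 3.0)]
w2, b2 = fine_tune_upper(DATA)
print(w2, b2)
```

Only `w2` and `b2` change during training; `FROZEN_W1`, the “academic knowledge”, is reused as-is, which is what makes this kind of adaptation cheap.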

Reducing numerical precision increases the number of operations a given processor can perform and shrinks the memory occupied by the model. The gains in computing speed can be considerable (often a factor of 10 to 20) and make it feasible to “teach” the LLM your organization’s key concepts.
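Speed gains depend on the hardware, but the memory side of reduced precision is simple arithmetic: the weights take the number of parameters multiplied by the bytes per weight. The sketch below assumes a hypothetical 7-billion-parameter model and counts only the weights (activations, optimizer state and other overheads come on top).

```python
# Bytes needed to store one weight at a given numerical precision.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_memory_gb(n_params: float, precision: str) -> float:
    """Memory taken by the model weights alone, in gigabytes (10**9 bytes)."""
    return n_params * BYTES_PER_WEIGHT[precision] / 1e9


# Hypothetical model size: 7 billion parameters.
for precision in BYTES_PER_WEIGHT:
    print(precision, weights_memory_gb(7e9, precision), "GB")
```

Going from 32-bit to 4-bit weights divides the footprint by 8 (28 GB down to 3.5 GB here), which is often what allows fine-tuning on a single, modest GPU.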

Level 3: Dynamic integration and information retrieval

The third level is where the LLM acquires a further level of competence. Here, a typical question might be:

- “Answer this question based on what you’ve learned in training AND go get the information”.

Information retrieval relies on “agents” (think of them as APIs, for simplicity’s sake) whose query methods we have described to the LLM. The LLM can then be connected to various sources, such as databases, or even sensors in an IoT-type project. This adds a dynamic, real-time dimension to processing, as opposed to the fixed knowledge acquired in training.

Frameworks such as LangChain or LlamaIndex are typically used to orchestrate these various information searches.
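The loop that such frameworks orchestrate can be sketched without them. In this hypothetical example, the two tools stand in for a database and an IoT sensor, and `fake_llm` is a scripted placeholder for a real model call: a real LLM would read the tool descriptions and decide by itself which tool to call. None of the names below come from the LangChain or LlamaIndex APIs.

```python
import json
from typing import Callable, Dict, Tuple


def get_stock_level(part_id: str) -> str:
    inventory = {"P-100": 42, "P-200": 0}  # stand-in for a real database query
    return str(inventory.get(part_id, "unknown"))


def read_temperature(sensor_id: str) -> str:
    return "21.5"  # stand-in for a live IoT sensor reading


# Each tool is described to the LLM by a name and a description.
TOOLS: Dict[str, Tuple[str, Callable[[str], str]]] = {
    "get_stock_level": ("Return the stock level of a part, given its id.", get_stock_level),
    "read_temperature": ("Return the current temperature of a sensor, given its id.", read_temperature),
}


def describe_tools() -> str:
    """Text placed in the prompt so the LLM knows how to query each tool."""
    return "\n".join(f"{name}: {desc}" for name, (desc, _) in TOOLS.items())


def fake_llm(prompt: str) -> str:
    """Scripted stand-in for a real LLM: first asks for a tool, then answers
    using the tool result that was appended to the prompt."""
    if "Tool result:" in prompt:
        result = prompt.rsplit("Tool result:", 1)[1].strip()
        return f"FINAL: There are {result} units of part P-100 in stock."
    return json.dumps({"tool": "get_stock_level", "argument": "P-100"})


def answer(question: str, max_steps: int = 3) -> str:
    prompt = f"Tools:\n{describe_tools()}\n\nQuestion: {question}"
    for _ in range(max_steps):
        reply = fake_llm(prompt)
        if reply.startswith("FINAL:"):
            return reply[len("FINAL:"):].strip()
        call = json.loads(reply)  # the model asked for fresh information
        _, func = TOOLS[call["tool"]]
        prompt += f"\nTool result: {func(call['argument'])}"  # feed live data back
    return "No answer within the step budget."


print(answer("How many P-100 parts are in stock?"))
```

The `max_steps` budget is a simple safeguard against a model that keeps requesting tools without ever concluding.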

Level 4: Connecting concepts for deeper insights

Finally, the fourth level is undoubtedly the most advanced. The idea here is to make full use of the LLM’s capacity for “understanding”, and more specifically its ability to extract concepts and follow a guiding thread, often described in English as “connecting the dots”. One application is CSR (corporate social responsibility), where it can be important to know one’s tier-n suppliers in order to find out whether they are linked to dubious ethical practices. Another example is risk assessment and, more generally, any search for information that requires “bouncing” from one piece of information to the next. The typical question here would be:

- “Answer this question by relying on what you’ve learned in training AND by recursively searching for information along a given axis”.

Here, the dynamic aspect is reinforced further: it is no longer just the data that changes, but the whole process, which evolves as information is found. At this stage, it’s important to specify the search axes, because the combinatorics can quickly explode without producing convincing results. It’s better to focus the LLM on what will create value, according to the needs of each business area.

For example, for a CSR risk analysis, the LLM could be asked to “dig” along ecological or ethical lines. In an industrial company, we might focus on the links between subcontractors and the origin of components (for example, for components subject to ITAR, the US International Traffic in Arms Regulations).
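This “dot connecting” can be pictured as a bounded traversal of a graph along one axis. In this hypothetical sketch, the supplier network and the ethics findings are made-up lookup tables; in a real system, each hop would involve the LLM reading documents to discover the next links. The `max_depth` parameter shows why specifying the search perimeter matters: each extra supplier tier can multiply the number of companies to examine.

```python
from collections import deque
from typing import Dict, Tuple

# Hypothetical supplier network: company -> direct (tier-1) suppliers.
SUPPLIERS = {
    "OurCompany": ["AlphaParts", "BetaMetals"],
    "AlphaParts": ["GammaMining"],
    "BetaMetals": ["DeltaChem", "GammaMining"],
    "GammaMining": ["EpsilonLogistics"],
    "DeltaChem": [],
    "EpsilonLogistics": [],
}

# Hypothetical findings along the chosen axis (here: ethical concerns).
ETHICS_FLAGS = {"GammaMining": "reported labour-rights issues"}


def dig(start: str, max_depth: int) -> Dict[str, Tuple[int, str]]:
    """Breadth-first search that follows supplier links tier by tier,
    stopping at max_depth so the search cannot explode combinatorially.
    Returns flagged suppliers with the tier at which they were found."""
    findings: Dict[str, Tuple[int, str]] = {}
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        company, depth = queue.popleft()
        if company in ETHICS_FLAGS:
            findings[company] = (depth, ETHICS_FLAGS[company])
        if depth == max_depth:
            continue  # do not expand beyond the allowed tier
        for supplier in SUPPLIERS.get(company, []):
            if supplier not in seen:  # shared suppliers are examined only once
                seen.add(supplier)
                queue.append((supplier, depth + 1))
    return findings


print(dig("OurCompany", max_depth=1))  # tier-1 suppliers only
print(dig("OurCompany", max_depth=2))  # reaches the tier-2 supplier with a flag
```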

In conclusion

So, beyond the media buzz generated by LLMs, the question of how to use generative AI ultimately comes down, as it so often does, to mastering information and how it is used. Several options are available to enrich what the LLM knows and to take the company’s specific characteristics into account.

Patrick Chable – Practice Manager JEMS